Dear Reader,

One of the unfortunate consequences of rising interest rates and uncertain monetary and fiscal policy is the impact on the early-stage venture capital (VC) market.

It’s probably not something that we would normally think of. After all, the private markets are a lot less transparent than the public markets. And there’s no stock market index like the NASDAQ that reminds us daily about the health, or lack thereof, of early-stage investment activity.

But I watch the private markets closely. Not just because I’m an angel investor and invest in private companies, but because investments in early-stage businesses give us insight into what institutional capital is doing with its money, and into the view that capital has on near-future economic conditions.

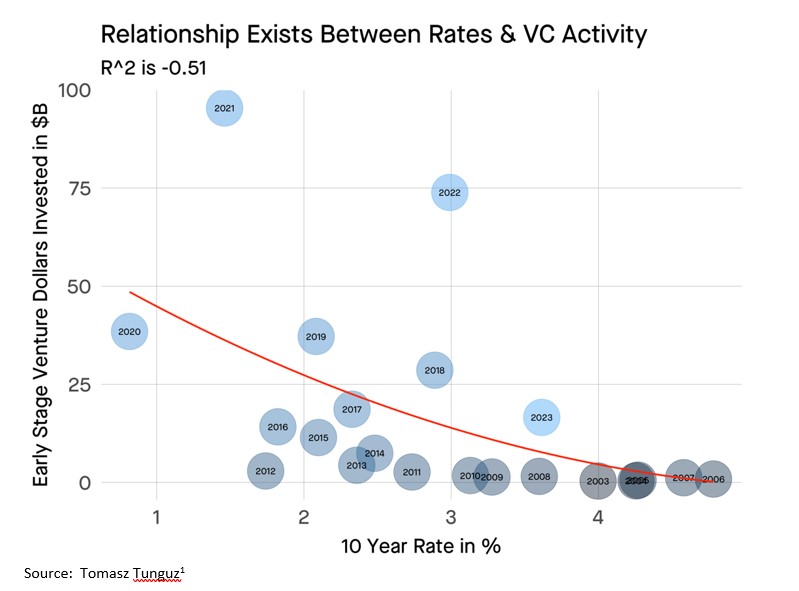

I found the image below a useful snapshot to demonstrate the relationship between higher interest rates and early-stage venture capital investment.

Source: Tomasz Tunguz

Above we can see that in general, as interest rates increase, overall levels of early-stage venture capital investments tend to decline. This data is just from the last two decades, and the interest rate is based on the 10-year U.S. Treasury rate.

It’s not a perfect correlation, but there’s a good reason for that. Institutional capital, like the kind of money that invests in venture capital funds as limited partners, has a lag time between cause and effect.

Venture capital funds raised record levels from limited partners in 2020 and 2021. Massive VC funds were raised, and there is always an incentive to deploy the funds within the first couple of years and put that capital to work. After all, limited partners aren’t investing in VC funds so that VCs can sit on the capital and earn interest. They expect the capital to be deployed quickly into high-growth potential companies in hopes of generating outsized returns.

That’s why 2022 was such an outlier. Despite a high and rising interest rate environment, the VCs were still deploying fresh capital that was raised prior to the economic calamity last year. And while VCs were investing last year into early-stage companies, institutional capital was quickly reducing investment into new VC funds. In fact, many funds in the industry returned capital last year to satisfy requests from limited partners and to acknowledge worsening economic conditions.
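The lag argument lends itself to a simple check: correlate interest rates in one year with early-stage investment some number of years later, and see which lag gives the strongest (most negative) relationship. Below is a minimal Python sketch of that lagged correlation. The function names are hypothetical, it assumes two equal-length annual series supplied by the reader, and the demo series are synthetic (built so that investment mirrors the prior year's rate), not real market data.

```python
from math import sqrt
from typing import Sequence


def pearson(a: Sequence[float], b: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length series."""
    n = len(a)
    if n != len(b) or n < 2:
        raise ValueError("series must have the same length (at least 2)")
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / sqrt(var_a * var_b)


def lagged_correlation(rates: Sequence[float], investment: Sequence[float], lag: int) -> float:
    """Hypothetical helper: correlate rates in year t with investment in year t + lag."""
    if lag < 0 or lag >= len(rates) - 1:
        raise ValueError("lag must leave at least two overlapping years")
    if lag == 0:
        return pearson(rates, investment)
    return pearson(rates[:-lag], investment[lag:])


def strongest_negative_lag(rates: Sequence[float], investment: Sequence[float], max_lag: int) -> int:
    """Return the lag (in years) whose correlation is most negative."""
    return min(range(max_lag + 1), key=lambda k: lagged_correlation(rates, investment, k))


# Synthetic demo only: investment in year t is the negative of the rate in year t - 1.
rates = [1.0, 2.0, 4.0, 3.0, 5.0, 7.0]
investment = [0.0] + [-r for r in rates[:-1]]
for k in range(3):
    print(f"lag {k}: r = {lagged_correlation(rates, investment, k):+.2f}")
print("strongest negative lag:", strongest_negative_lag(rates, investment, 2))
```

With real data the series are noisy and short, so a single lagged correlation figure should be read as suggestive rather than conclusive.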

And with the Fed Funds rate already at 5% with the Federal Reserve alluding to even higher rates, we should expect that institutional capital will continue to move to safety. This capital movement reflects what that capital is expecting in the near future. And that expectation is that economic conditions will get worse, not better.

Sadly, this is what happens with a $1 trillion-plus annual budget deficit, a credit crunch, and rampant inflation. I expect that early-stage venture capital investment this year will be on par with 2017 or 2018 levels and will almost certainly be less than half of last year’s total. It feels like we’re taking a massive step backwards…

This is painful to watch unfold. After all, investments in new early-stage companies are the lifeblood of innovation in most markets around the world. It is the investment in the next generation of great ideas and founders that are building new products and services that the world has never seen.

And naturally, these new companies are enormous job creation engines, as well as sources of new wealth creation for both investors and employees. And for free market countries, a healthy and vibrant early-stage venture capital sector is a matter of national competitiveness.

This model is precisely why the U.S., for example, is currently leading the world in breakthrough technologies like artificial intelligence (AI), robotics, genetic editing, and nuclear fusion technology. The best work in these sectors is not being done by governments. It’s happening at small private companies that have been funded by the venture capital and angel investing community.

It’s not all dire though. I’ve never seen so many exciting technological developments happening at the same time in my life. There are so many things to get excited about. The current dynamics just mean that we have to work harder to find great early-stage private companies.

The current early-stage market is dramatically slower than it was back in 2021 because even promising founders tend to become more conservative in tough economic conditions. There are still great deals out there. They just require a lot more leg work.

This is part of a natural ebb and flow for early-stage investment. And the positive sign that we’ll be looking for is an increase in VC fundraising, which we should expect next year after the Federal Reserve is forced to reverse its policy position in an effort to recover from the mess that has been created over the last few years.

After that, early-stage VC investment will rebound quickly as institutional capital rotates back into high-growth assets that thrive in healthy economic environments.

Just a few days ago, the U.S. Nuclear Regulatory Commission (NRC) unanimously voted for nuclear fusion energy to be regulated in the same category as particle accelerators, not nuclear fission reactors. This is huge.

As a reminder, nuclear fusion is essentially the power of the Sun. It involves taking two separate nuclei and combining them in a reactor under intense heat and pressure to form a new nucleus.

This produces an enormous amount of energy that’s 100% clean. And unlike nuclear fission, some forms of nuclear fusion produce no radioactive waste.
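Where that energy comes from can be shown with a little arithmetic: the products of a fusion reaction weigh slightly less than the nuclei that went in, and the missing mass is released as energy (E = mc²). A minimal Python sketch, assuming deuterium-tritium (D-T) fusion, the fuel used at NIF, and standard atomic-mass values; the helper names are hypothetical. D + T yields a helium-4 nucleus and a neutron, releasing roughly 17.6 MeV per reaction.

```python
# Atomic masses in unified atomic mass units (u), standard reference values.
MASS_U = {
    "deuterium": 2.014101778,
    "tritium": 3.016049281,
    "helium4": 4.002603254,
    "neutron": 1.008664916,
}
MEV_PER_U = 931.494          # energy equivalent of 1 u via E = mc^2
J_PER_MEV = 1.602176634e-13  # joules per MeV
KG_PER_U = 1.66053906660e-27 # kilograms per u


def q_value_mev(reactants: list[str], products: list[str]) -> float:
    """Energy released per reaction: (mass before - mass after) * c^2, in MeV."""
    mass_before = sum(MASS_U[p] for p in reactants)
    mass_after = sum(MASS_U[p] for p in products)
    return (mass_before - mass_after) * MEV_PER_U


def energy_per_kg_fuel(reactants: list[str], products: list[str]) -> float:
    """Energy released per kilogram of fuel consumed, in joules."""
    q_joules = q_value_mev(reactants, products) * J_PER_MEV
    fuel_kg = sum(MASS_U[p] for p in reactants) * KG_PER_U
    return q_joules / fuel_kg


reactants = ["deuterium", "tritium"]
products = ["helium4", "neutron"]
print(f"D-T fusion: {q_value_mev(reactants, products):.1f} MeV per reaction")
print(f"about {energy_per_kg_fuel(reactants, products):.1e} J per kg of fuel")
```

Electron masses cancel here because the atomic masses on each side carry two electrons in total.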

Many experts are saying that nuclear fusion is still decades away. But they are wrong. Several approaches to nuclear fusion are getting very close to demonstrating nuclear fusion reactions and even net energy outputs… and this latest regulatory development is basically an acknowledgement of this fact.

That’s because there wouldn’t be a catalyst for the NRC to make a regulatory decision like this if the technology were really 10 or 20 years away. Clearly, those industry insiders recognize the tremendous progress that’s been made in the industry during the last two or three years.

The most recent and press-worthy catalyst is what happened at the Lawrence Livermore National Laboratory’s National Ignition Facility (NIF) back in December.

If we remember, the NIF demonstrated a nuclear fusion reaction that produced more fusion energy than the laser energy delivered to its fuel target. That’s typically called “net-energy production.”

The key here is that if a nuclear reactor is capable of net-energy production, that means it can produce limitless clean energy. Yes, there is a large amount of energy required to get the reactor “charged up,” but with any sustainable fusion reaction, it doesn’t take long before the reactor is producing far more energy than is needed to maintain the reaction.

To be clear, the NIF’s experiment wasn’t done at scale and the reaction itself only ran for a few trillionths of a second. In that way, it was more of a proof of concept. But this was the first time we’ve seen a reactor achieve net-energy production. And that catalyst combined with a record level of private investment in nuclear fusion companies in 2022 prompted the NRC to make its decision on how fusion should be regulated.
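The NIF result is usually summarized as a gain factor, Q: fusion energy released divided by energy delivered. A minimal Python sketch using the widely reported figures for the December 2022 shot (about 2.05 MJ of laser energy in, about 3.15 MJ of fusion energy out). This is target gain: it compares the yield with the laser light that reached the fuel capsule, not with the far larger amount of electricity the facility used to fire its lasers. The helper name is hypothetical.

```python
def fusion_gain(energy_out_mj: float, energy_in_mj: float) -> float:
    """Gain factor Q = energy out / energy in; Q > 1 means net energy at this accounting boundary."""
    if energy_in_mj <= 0:
        raise ValueError("input energy must be positive")
    return energy_out_mj / energy_in_mj


# Widely reported figures for the December 2022 NIF shot, in megajoules.
LASER_ENERGY_ON_TARGET_MJ = 2.05
FUSION_YIELD_MJ = 3.15

q_target = fusion_gain(FUSION_YIELD_MJ, LASER_ENERGY_ON_TARGET_MJ)
print(f"Target gain Q = {q_target:.2f}, net energy at the target: {q_target > 1}")
```

Moving the accounting boundary out to the wall plug, or to a whole power plant, enlarges the denominator, which is why a commercial reactor needs a gain well above 1.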

Of course, this is great news. Regulatory clarity is critical to fostering a new industry’s growth.

And in addition to the regulatory clarity, the decision to treat fusion as different from fission was the right call.

As we’ve discussed, fusion reactions produce very little radioactive waste. And the waste that is produced has a half-life of only about 12 years. This compares to uranium-235, which has a half-life measured in hundreds of millions of years. And some forms of fusion do not produce any radioactive waste at all. It makes perfect sense that fusion reactors shouldn’t be regulated the same way as fission reactors, which do produce a material amount of nuclear waste.
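The gap between those half-lives follows from the exponential decay law, where the fraction of a radioactive isotope left after t years is 0.5 ** (t / half_life). A minimal Python sketch, assuming the roughly 12-year waste isotope is tritium (half-life about 12.3 years) and using uranium-235's half-life of about 704 million years; the helper name is hypothetical.

```python
# Approximate half-lives in years.
HALF_LIFE_YEARS = {
    "tritium": 12.32,
    "uranium-235": 7.04e8,
}


def fraction_remaining(years: float, half_life_years: float) -> float:
    """Fraction of the original amount left after `years`, by exponential decay."""
    if half_life_years <= 0:
        raise ValueError("half-life must be positive")
    return 0.5 ** (years / half_life_years)


for isotope, half_life in HALF_LIFE_YEARS.items():
    left = fraction_remaining(100, half_life)
    print(f"{isotope}: {left:.6f} of the original amount left after 100 years")
```

After a century, well under 1% of the tritium remains, while essentially all of the uranium-235 is still there.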

So the NRC got it right here. And this paves the way for the nuclear fusion industry to thrive. And hopefully, it will help dispel any false political narratives intended to conflate nuclear fission and nuclear fusion. If that were to happen, it could stifle progress towards commercial nuclear fusion – the planet’s best hope for 100% clean baseload power generation.

This is an exciting year for the industry. On the back of a record year of funding in 2022, incredible progress is being made. And we should expect two or three promising private companies to bring prototype fusion reactors online for testing next year.

Breakthroughs in nuclear fusion will transform the planet, and not just from the perspective of producing clean energy. Nuclear fusion-based energy will likely become the cheapest form of electricity in history, and the widespread availability of cheap energy has been one of the primary engines for the extraordinary economic growth that we’ve experienced over the last century.

One of my favorite new space exploration companies just launched its first mission. I’m incredibly excited about this one…

The company is called AstroForge. And its focus is on mining asteroids in space.

It might seem like a crazy idea, but the thought of asteroid mining isn’t new. Planetary Resources and Deep Space Industries were early entrants into the field – too early, in fact. Both were acquired for small sums in 2018 and 2019, as technology and launch costs were not yet in a place to enable commercially viable businesses.

But thanks to SpaceX, as we’ve discussed before, the cost of launching payload has fallen more than 90% over the last couple of decades. And once SpaceX’s Starship is operational, launch costs will fall by a further 90% or more. That makes it far more economical for companies like AstroForge to make asteroid mining a reality.
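Successive percentage cuts compound multiplicatively rather than adding up. A short Python sketch, taking the two 90% reductions described above as inputs (the function name is hypothetical):

```python
import math


def remaining_cost_fraction(reductions: list[float]) -> float:
    """Fraction of the original cost left after applying each fractional reduction in turn."""
    for r in reductions:
        if not 0 <= r < 1:
            raise ValueError("each reduction must be in [0, 1)")
    return math.prod(1 - r for r in reductions)


remaining = remaining_cost_fraction([0.90, 0.90])
print(f"cost remaining: {remaining:.1%}, total reduction: {1 - remaining:.1%}")
```

Two 90% cuts leave about 1% of the original cost, a 99% reduction overall, so the cost per kilogram to orbit falls by a factor of roughly 100.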

For its first mission, AstroForge is sending its Brokkr-1 spacecraft up into orbit with minerals and rocks that are similar to what will be found on an asteroid. Equipment on the craft will then vaporize the materials and sift through everything to extract the key elements of value.

This will all be done while in zero gravity. It’s a proof-of-concept mission to confirm that the Brokkr-1 is capable of sifting through minerals in space. This is important because there is no need to haul low-value materials back to Earth (or the Moon). The key is to extract what is of value and leave the rest in space.

AstroForge plans to follow this up with a second mission in October. It aims to launch another craft to go out and scout an asteroid that’s about 20 million miles from Earth.

This is a reconnaissance mission as much as a test flight for arriving at and surveying an asteroid target.

This is exciting because there are about 9,000 small asteroids in orbit near Earth. That makes for a target-rich environment, and one that is accessible to companies like AstroForge.

What’s more, we believe these asteroids contain trillions of dollars’ worth of minerals and metals. That’s why asteroid mining makes so much sense.

For a visual, here’s an artist’s rendering depicting an asteroid mining operation:

Source: Mining.com

If we think about the rare earth metals that power our electronic devices and now electric vehicles (EVs), they are incredibly scarce on this planet and exist in very low concentrations in ore. Extracting rare earth elements is an extremely carbon-intensive process that causes substantial damage to the surrounding environment.

However, these same rare earth elements can exist in much higher concentrations in asteroids. If we can access and mine those metals at much higher concentrations, it is a far better approach than what happens on Earth. Companies like AstroForge could be absolutely revolutionary – both for the space economy and for life here on Earth.

I’m excited to follow AstroForge’s progress this year with its first two missions and look forward to further progress next year with the prototype mining spacecraft.

Ever since OpenAI released ChatGPT last December, we have seen one exciting development after another, week after week. This week was no different.

We got a peek behind Google’s latest breakthrough in artificial intelligence-specific supercomputing that has been a key to accelerating developments in generative AI.

Specifically, Google provided some valuable performance details about its most recent Google Cloud tensor processing unit (TPU) v4 computing system, a system that is on par with the most powerful classical supercomputer in the world, Frontier, which is maintained at Oak Ridge National Laboratory.

We’ve talked about Google’s TPUs before. We can think of TPUs as custom-designed semiconductors built specifically for artificial intelligence applications. Whereas the graphics processing units (GPUs) made by semiconductor companies like NVIDIA and AMD are general purpose, TPUs are application specific: they are designed for AI and machine learning workloads.

And the Cloud TPU v4 is an absolute powerhouse. It’s capable of ten times the performance of Google’s previous version. Here is a picture of about one-eighth of the total system:

Source: Google

Here we can see that the hardware features rack upon rack of interconnected high-end processors.

The significance here is that this hardware is capable of operating at an exaflop scale. That puts it on par with Frontier. For context, one exaflop refers to one quintillion floating-point operations per second. That’s some serious computing power.

And Google also developed and employed optical circuit switching (OCS) technology in this supercomputer design. This is materially important because the design eliminates the need to convert signals from optical to electrical and back to optical again. This alone significantly reduces power consumption and increases the overall performance of the system.

Google estimates that the OCS technology improves energy efficiency by a factor of two or three and reduces overall carbon emissions by a factor of about 20. Data centers have massive environmental footprints, so even small efficiency improvements can lead to large benefits.

The Google Cloud TPU v4 is the system on which Google trained its own generative AI model, PaLM, short for “Pathways Language Model.” It’s a large language model very similar to the one behind OpenAI’s ChatGPT.

And here’s the key – Google trained PaLM, a 540-billion-parameter model, in just 50 days. That’s it. Not too long ago this would have taken years to accomplish. This is a great example of how advances in computing hardware and semiconductors are key to driving accelerated developments in software (AI & machine learning).
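A back-of-the-envelope estimate shows why exaflop-scale hardware puts a model of PaLM's size within reach in weeks. A minimal Python sketch under stated assumptions: it uses the common rule of thumb that training costs about 6 floating-point operations per parameter per training token (an approximation from the scaling-law literature, not Google's own accounting), assumes roughly 780 billion training tokens (the figure reported for PaLM), a peak of one exaflop (10^18 operations per second) as described above, and a hypothetical 50% hardware utilization. The function names are illustrative.

```python
SECONDS_PER_DAY = 86_400
EXAFLOP = 1e18  # one quintillion floating-point operations per second


def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training cost: ~6 FLOPs per parameter per training token."""
    return 6.0 * n_params * n_tokens


def training_days(total_flops: float, peak_flops_per_s: float, utilization: float) -> float:
    """Wall-clock days at a given peak throughput and fraction of peak actually achieved."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = total_flops / (peak_flops_per_s * utilization)
    return seconds / SECONDS_PER_DAY


flops = training_flops(540e9, 780e9)  # 540B parameters, ~780B tokens (assumed)
days = training_days(flops, EXAFLOP, 0.5)  # 50% utilization is a hypothetical figure
print(f"{flops:.2e} FLOPs -> roughly {days:.1f} days")
```

The estimate lands at a couple of months, the same ballpark as the 50 days reported; the result is most sensitive to the utilization assumption.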

Application-specific semiconductor companies targeting AI and machine learning will be one of the biggest stories of 2023. And while we may not be aware of all these advancements, we’ll feel their impact as AI software applications quickly reach consumers and businesses alike.

Regards,

Jeff Brown

Editor, The Bleeding Edge

The Bleeding Edge is the only free newsletter that delivers daily insights and information from the high-tech world as well as topics and trends relevant to investments.
